Semantic navigation

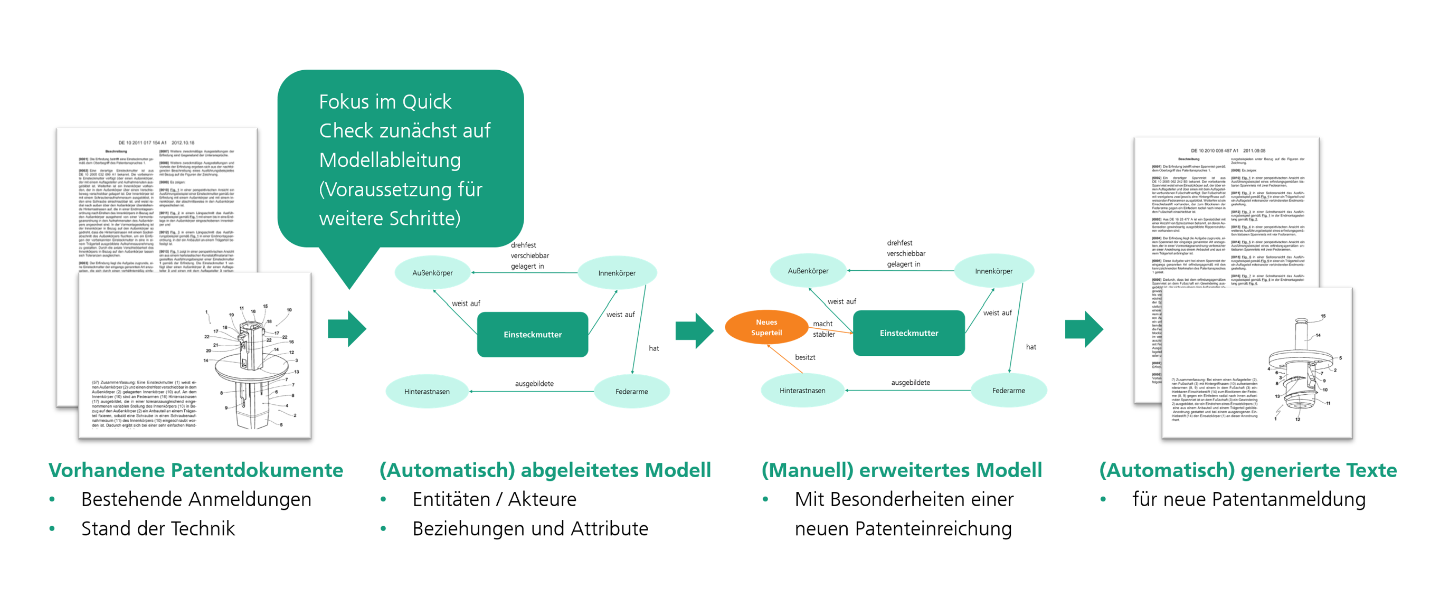

Initial situation

Autonomous mobile robots carry out transportation tasks in logistics independently. They navigate in dynamic environments that they share with tugger trains, forklift trucks and people, for example. Autonomous navigation uses 2D laser scanners to capture the environment. However, the information these scanners provide is limited, so the semantics of a scene cannot be reliably recognized. An AI-based solution can improve this. To ensure smooth operation, the robot must adapt its behavior to the situation, for example by giving priority to tugger trains. However, no object or image databases exist yet for tugger trains, forklift trucks and similar vehicles. As a result, available AI models are unable to recognize these objects.

Solution idea

Visual perception algorithms can generate a complex understanding of the environment, which is then managed and analyzed in an environment model developed by Fraunhofer IPA. This is possible provided that state-of-the-art AI methods can be adapted to recognize the relevant object classes.

Benefit

Environment perception, i.e. AI-based classification of objects, provides knowledge for decision-making and planning and enables robots to be controlled using cognitive functions. In future, the robot will be able to differentiate between objects in the environment so that navigation planning can be adapted depending on the semantics. Based on the aggregated knowledge, heat maps can be created, allowing the robot to avoid highly frequented areas at peak times, for example. This makes it more productive.
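The heat-map idea can be illustrated with a minimal sketch. It is not the Fraunhofer IPA environment model. It only assumes that each classified object arrives with a map position in meters and an hour of day, and it counts observations per grid cell and hour. The names `HeatMap`, `cell_of` and `CELL_SIZE_M`, as well as the 2 m cell size, are hypothetical choices made for illustration.

```python
import math
from collections import defaultdict

CELL_SIZE_M = 2.0  # assumed grid resolution in meters (illustrative value)


def cell_of(x, y, cell_size=CELL_SIZE_M):
    """Map a position in meters to integer grid-cell indices."""
    return (math.floor(x / cell_size), math.floor(y / cell_size))


class HeatMap:
    """Counts object observations per (hour of day, grid cell)."""

    def __init__(self):
        self._counts = defaultdict(int)

    def add(self, x, y, hour):
        """Record one observed object at position (x, y) during the given hour."""
        self._counts[(hour, cell_of(x, y))] += 1

    def count(self, x, y, hour):
        """Number of observations in the cell containing (x, y) at that hour."""
        return self._counts.get((hour, cell_of(x, y)), 0)

    def busy_cells(self, hour, threshold):
        """Cells with at least `threshold` observations at that hour."""
        return {
            cell
            for (h, cell), n in self._counts.items()
            if h == hour and n >= threshold
        }


heat_map = HeatMap()
for _ in range(3):
    heat_map.add(1.0, 1.0, hour=8)   # three sightings near the same spot at 08:00
heat_map.add(5.0, 1.0, hour=8)       # one sighting elsewhere at 08:00
heat_map.add(1.0, 1.0, hour=14)      # one sighting at 14:00
```

A planner could treat the cells returned by `busy_cells` for the current hour as regions with a higher traversal cost, so that routes avoid them at peak times. How that cost is applied depends on the planner in use and is left open here.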

Implementation of the AI application

Evaluation of available methods and their adaptability to the use case. The implementation includes:

- Instance segmentation with FastSAM

- Object recognition using the modern YOLOv8 model

- Data expansion through the use of diffusion models

- Application of open-vocabulary models based on large language models (LLMs) to the rare object classes

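Once the models listed above deliver labelled detections, adapting the robot's behavior to the semantics could look roughly like the following sketch. It does not call FastSAM or YOLOv8. It assumes only that each detection carries a class label and a confidence score. The class names, the behaviors (`proceed`, `slow_down`, `give_way`), the 0.5 confidence threshold and the cautious default for unknown classes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # class name reported by the perception model
    confidence: float  # score in [0, 1]


# Hypothetical behaviors, ranked from least to most restrictive.
BEHAVIOR_RANK = {"proceed": 0, "slow_down": 1, "give_way": 2}

# Hypothetical mapping from object class to the behavior it triggers.
CLASS_BEHAVIOR = {
    "tugger_train": "give_way",
    "forklift": "give_way",
    "person": "slow_down",
}

# Assumption: classes without a mapping are handled cautiously.
DEFAULT_BEHAVIOR = "slow_down"


def select_behavior(detections, min_confidence=0.5):
    """Return the most restrictive behavior triggered by confident detections."""
    behavior = "proceed"
    for det in detections:
        if det.confidence < min_confidence:
            continue  # ignore uncertain detections
        candidate = CLASS_BEHAVIOR.get(det.label, DEFAULT_BEHAVIOR)
        if BEHAVIOR_RANK[candidate] > BEHAVIOR_RANK[behavior]:
            behavior = candidate
    return behavior


scene = [
    Detection("person", 0.91),
    Detection("tugger_train", 0.78),
    Detection("forklift", 0.30),  # below the threshold, so ignored
]
chosen = select_behavior(scene)
```

Choosing the most restrictive behavior means that a single confident tugger-train detection is enough for the robot to give way, which matches the priority rule described in the initial situation.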